Press release

Creating Natural Speech and Motion with Probabilistic Models

Speech and motion synthesis, areas surprisingly close to philosophy, are the special interests of WASP Assistant Professor Gustav Eje Henter: “I have always been interested in understanding human nature. If we are able to create something indistinguishable from a human, we must have understood something about ourselves.”

Artificial intelligence and machine learning are often used to interpret or to see patterns in large sets of data. Gustav Eje Henter, WASP Assistant Professor at KTH Royal Institute of Technology, instead uses machine learning in the opposite direction, to generate new data in the form of synthetic speech and motion.

Even before he started on the master’s programme in Engineering Physics at KTH Royal Institute of Technology, Gustav was interested in turning abstract mathematical models into something that could be listened to, even if this mostly just produced weird sounds.

Another piece of the puzzle came from his exchange at UBC in Canada, where he saw a poster that featured a quote by E. T. Jaynes, which awakened a thought that would later play a big role in his research: “we can make good and rational decisions using probability theory and statistics.” This influenced him to study machine learning. For his PhD, he combined this with his interest in sound by building mathematical and statistical models to create speech.

“It turned out it wasn’t quite that easy to solve all problems about decisions. In reality, things are never as easy as point A leads to point B; it is much more complex. But probability has still been very useful in science and engineering.”

After his doctoral studies, Gustav went abroad for postdoctoral work. He first landed at the famous speech-synthesis group at the University of Edinburgh, and later at the National Institute of Informatics in Tokyo, Japan. After five years abroad he returned to KTH as a postdoc, and in January 2020 he was promoted to Assistant Professor with support from WASP.

Defining what is natural sound

“During my PhD, I supervised an assignment where the students built a computer program that could recognise 10 different words. For the final lecture in the course, I showed the students how to turn their recognisers around, and make them talk instead. Even the best systems sounded terrible. This was an eye-opener that got me interested in understanding why – why did it sound so bad? And that question has defined a big part of my research journey.”

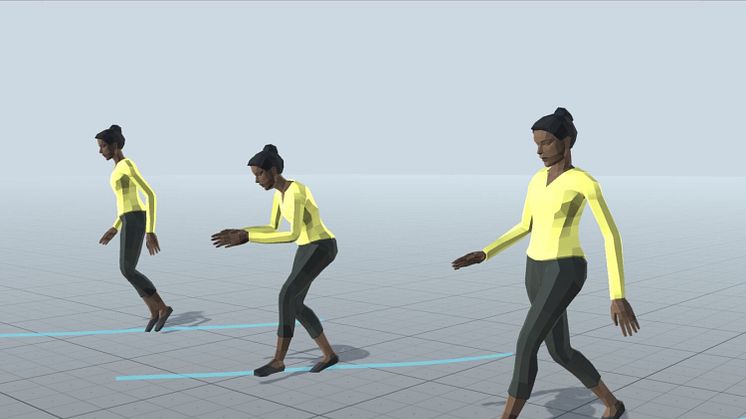

With machine learning and probabilistic modelling, Gustav Eje Henter creates avatars that speak, move and gesture. In particular, he seeks to enable machines both to identify the different ways that a human might speak a given text or perform a specific motion, and to reproduce them. For this purpose, he uses probabilistic modelling, a mathematical framework that describes a range of possible outcomes for the same input. In contrast to a traditional deterministic model, which produces only a single average output (the average way of speaking and moving), a probabilistic model can generate many different, equally plausible voices and movements.
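The difference between the two kinds of model can be illustrated with a toy example. The sketch below is not Gustav Eje Henter's method; it is a minimal illustration under invented assumptions: a single "pitch" value in hertz stands in for a whole utterance, the same sentence is spoken in two hypothetical styles ("calm" and "excited"), and every number and function name is made up for the purpose.

```python
import random
import statistics


def make_training_data(rng, n=1000):
    """Hypothetical 1-D 'pitch' values (Hz) for one sentence in two speaking styles."""
    calm = [rng.gauss(110.0, 5.0) for _ in range(n // 2)]
    excited = [rng.gauss(180.0, 5.0) for _ in range(n // 2)]
    return calm, excited


def deterministic_prediction(calm, excited):
    """A model trained to minimise squared error ends up predicting the overall mean."""
    return statistics.fmean(calm + excited)


def fit_mixture(calm, excited):
    """Fit one Gaussian per style (style labels assumed known to keep the sketch short)."""
    total = len(calm) + len(excited)
    return [
        (len(calm) / total, statistics.fmean(calm), statistics.stdev(calm)),
        (len(excited) / total, statistics.fmean(excited), statistics.stdev(excited)),
    ]


def sample(mixture, rng):
    """Draw one output: first pick a style by its weight, then a pitch within that style."""
    weights = [weight for weight, _, _ in mixture]
    _, mean, std = rng.choices(mixture, weights=weights, k=1)[0]
    return rng.gauss(mean, std)


rng = random.Random(0)
calm, excited = make_training_data(rng)

average_pitch = deterministic_prediction(calm, excited)
mixture = fit_mixture(calm, excited)
samples = [sample(mixture, rng) for _ in range(200)]

print(f"Deterministic output: {average_pitch:.1f} Hz every time")
print("Probabilistic outputs:", [round(s) for s in samples[:8]])
```

In this toy setting the deterministic output lands between the two styles and resembles neither of them, while the sampled outputs fall in one style or the other and differ from draw to draw.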

“I have always been interested in understanding human nature. If we are able to create something indistinguishable from a human, chances are we must have understood something about ourselves,” says Gustav Eje Henter.

He continues:

“Today, with machine learning, our computers are much better at recreating humans than we or our computers are able to understand humanity.

“It is easy for a human to say if a speech sample or a gesture is good or bad. It is much more difficult to make a computer understand: will this be perceived as human? Computers do not understand human preferences and only learn to imitate what we do. Although we are getting better at simulating humans and our preferences, the real question, to truly understand and explain what is human and what is not, is still far from being solved.”

While talking one should also breathe

In a current project, Gustav and his co-workers aim to combine audio-visual elements and text to create an avatar that, from only text input, can read the text out loud and gesture like a natural speaker.

“We have trained our system with spontaneous speech and motion from a real person. When we asked the system to speak, we could hear it take breathing pauses, meaning the system had learnt that when speaking one should also breathe, and when to do it. We are well on our way to putting this all together to generate a close-to-natural speaking and gesturing avatar that varies voice and movement on its own. But we can’t explain in words and mathematics why its behaviour is perceived as natural.”

When talking about synthetic speech and speech recognition, one application often mentioned is robots and artificial humans. The film and gaming industries are other application domains interested both in synthetic speech and in body motion for animation. Beyond commercial interests, this research is also in demand in several scientific fields.

Gustav Eje Henter works in a multi-disciplinary group at the division of Speech, Music and Hearing, at the School of Electrical Engineering and Computer Science. In this environment, synthetic speech and speech recognition are central objects and tools for several communities, for example phoneticians and other scientists trying to understand how humans produce and perceive speech.

“In the early days of speech technology, we learned a lot from the phonetics field when building the first speaking machines. Now, thanks to technical advancements in machine learning and speech synthesis, we can start to give back to the phoneticians. They are still often using robot-like synthetic voices, and we can offer them something that can be used in their research but that is closer to natural speech. I think this might help advance the phonetics field, so that we not only simulate us humans but also understand ourselves.”

WASP fundamental enabler

Participating in the WASP program is something Gustav Eje Henter finds very rewarding, even though joining just before the Covid-19 outbreak has had its impact.

“WASP is a fundamental enabler for developing my research. I have two doctoral students on their way in and will later hire a postdoc; this wouldn’t be possible without WASP.”

“I have also gained a connection with a large network of researchers all over Sweden. Unfortunately, a pandemic got in the way a bit, but I have still found colleagues at Chalmers I wouldn’t have met outside the WASP program, and I have learnt about research at KTH I wasn’t aware of. I am convinced that participating in this large community will be even more rewarding later on, when everyone can meet in person again. Meeting people is something I think is very valuable; exchanging perspectives with others teaches you a lot.”

The Wallenberg AI, Autonomous Systems and Software Program (WASP) is a major national initiative for strategically motivated basic research, education and faculty recruitment. The ambition of WASP is to advance Sweden into an internationally recognized and leading position in the areas of artificial intelligence, autonomous systems and software.